Exploring and Understanding Law Enforcement’s Relationship with Technology: A Qualitative Interview Study of Police Officers in North Carolina

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. Participants and Setting

2.2. Data Collection

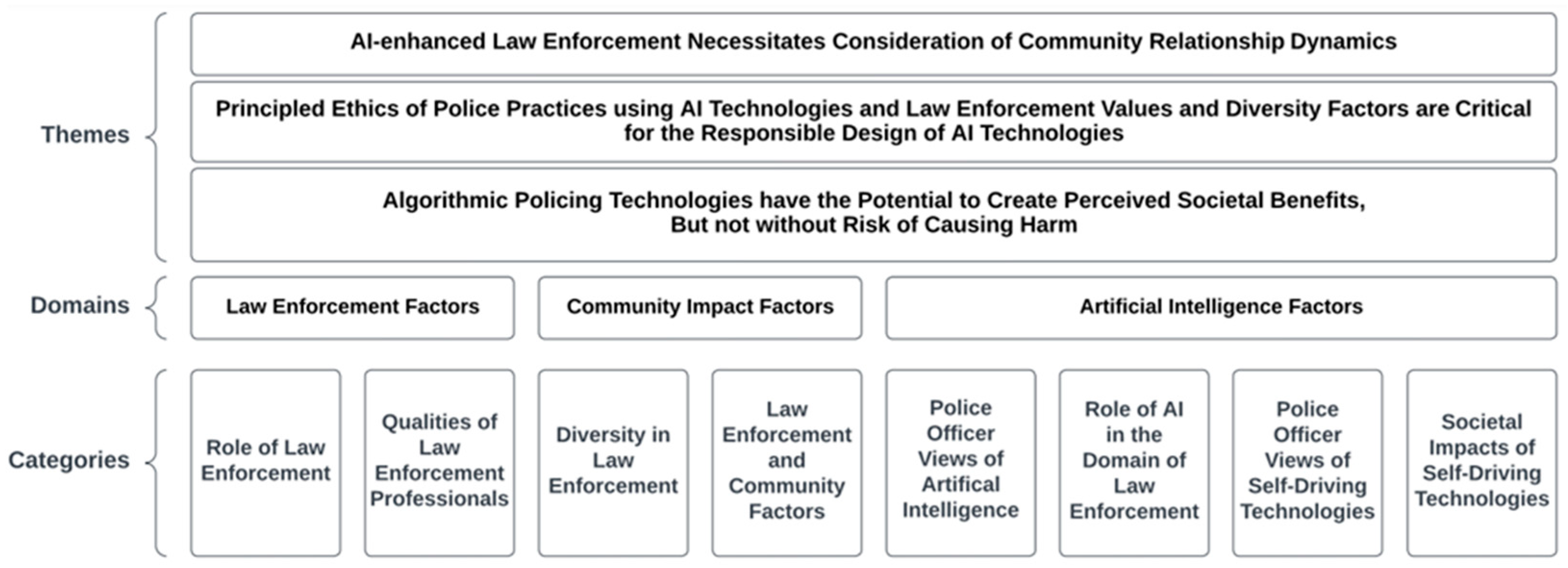

3. Results

3.1. Role of Law Enforcement

Serve and Protect: “Well, in my opinion, I mean, we are still on the front lines of protecting, but we also forget that second part of serving, right? So, most agencies have it somewhere in their motto or code of ethics or something like that, serving and protecting.”(Police Officer 14)

Managing Public Safety: “My particular role now is to keep the highways safe, keep them clear of obstruction, assist motorists, whether they have struck something in the road or someone else has struck them, and remove impaired drivers from the road. I do my little part in the big wheel of law enforcement in general.”(Police Officer 7)

3.2. Qualities of Law Enforcement Professionals

Integrity: “Of course, integrity, based on what we do, I mean, integrity is something that is required, and a lot of it has to do with the fact that we’re dealing with a lot of individuals who would be easy to manipulate or take advantage of, or steal from, and having that integrity aspect of characteristics would put the officers in a position where they know what they’re doing could be harmful to the individuals, and blatantly obvious immoral behavior such as stealing, hurting others, things like that. The definition I had one time of integrity was doing the right thing when nobody’s looking, but I disagree with that. Mine is, doing the right thing, not caring who’s looking, like you know, got to do the right thing whether they’re looking or not. So, I think integrity is a big deal.”(Police Officer 5)

Honesty: “You have to be honest because the citizens have to trust you. You are put in a position where you are the voice of the disenfranchised, those who cannot speak. You are there for the victims and the witnesses who are scared or cannot speak for themselves, so you have to do it for them. And if you are not honest, then you cannot do that.”(Police Officer 18)

Split-Second Decision-Making (Indecisiveness): “And with decision-making […] some people have a hard time making a decision in a split-second, or making a decision under duress, under force, and that will get people killed if they hesitate to try to make a decision.”(Police Officer 1)

3.3. Diversity in Law Enforcement

Community Representation: “Yeah, it’s important, you want an agency that looks like the people that we serve. So, if you have a predominant demographic, predominant race […] then that should probably […] be the predominant race or demographic in that agency, because if we serve the community, so we must be part of the community. So, we should look like the community.”(Police Officer 18)

Balancing Diversity with Qualifications: “Well, I am of the opinion that [we should recruit] the best person for the job […] regardless of the background. So, I do want to put that out there. I do not believe we should be hiring just because, or promoting, if you want to say that, promoting or hiring or anything along that, just because of racial or ethnicity issues. But getting back to your question, some of the problems that I think could arise is that we can get one-dimensional. Let’s say we have very little diversity in one agency. Their experience levels and their background levels are not going to be as vast and expansive as it would if we are able to bring in different backgrounds and different ethnicities and different genders.”(Police Officer 14)

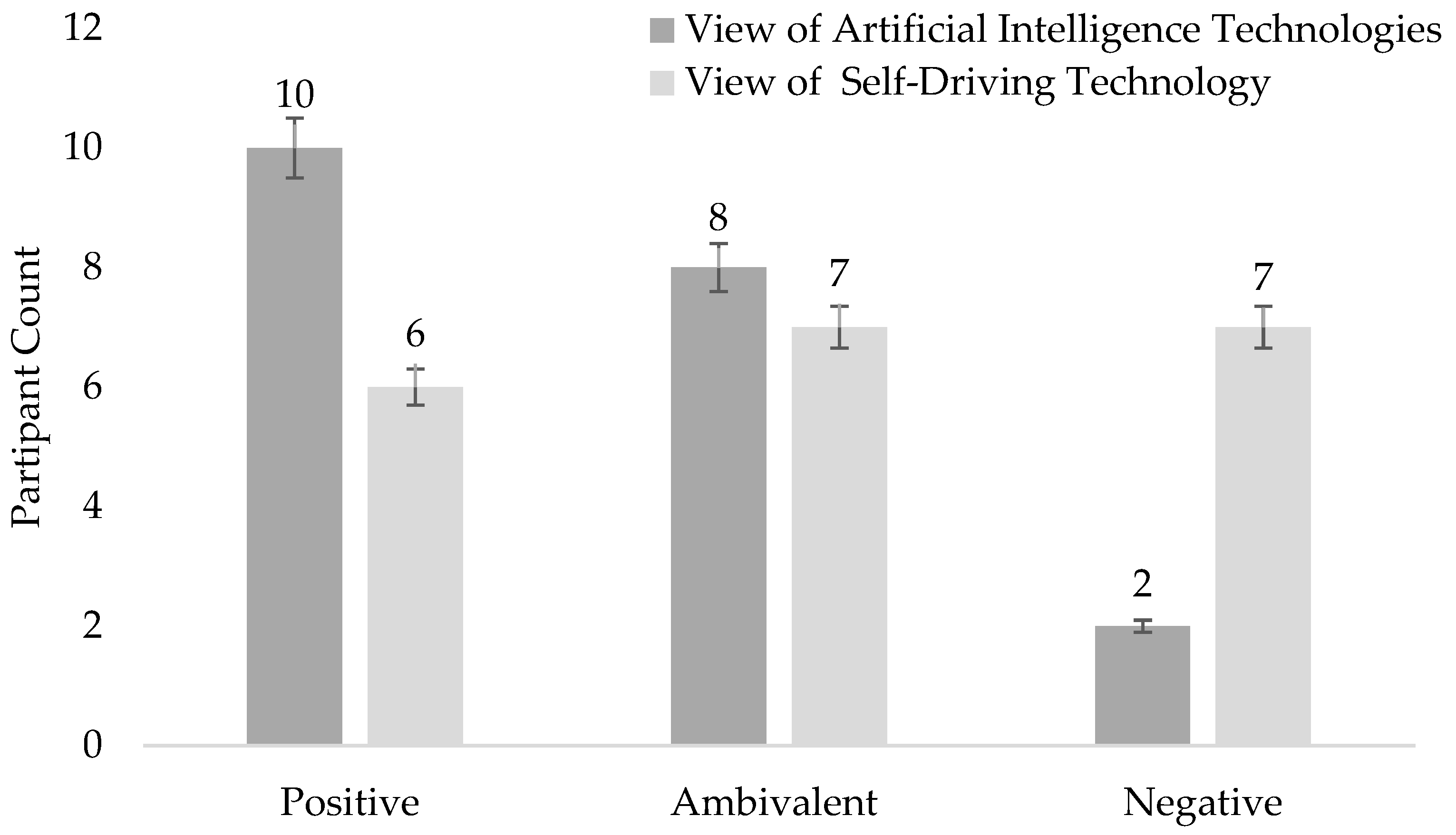

3.4. View of Artificial Intelligence (AI) Technologies

3.5. View of Self-Driving Technology

3.6. Role of Artificial Intelligence in Policing

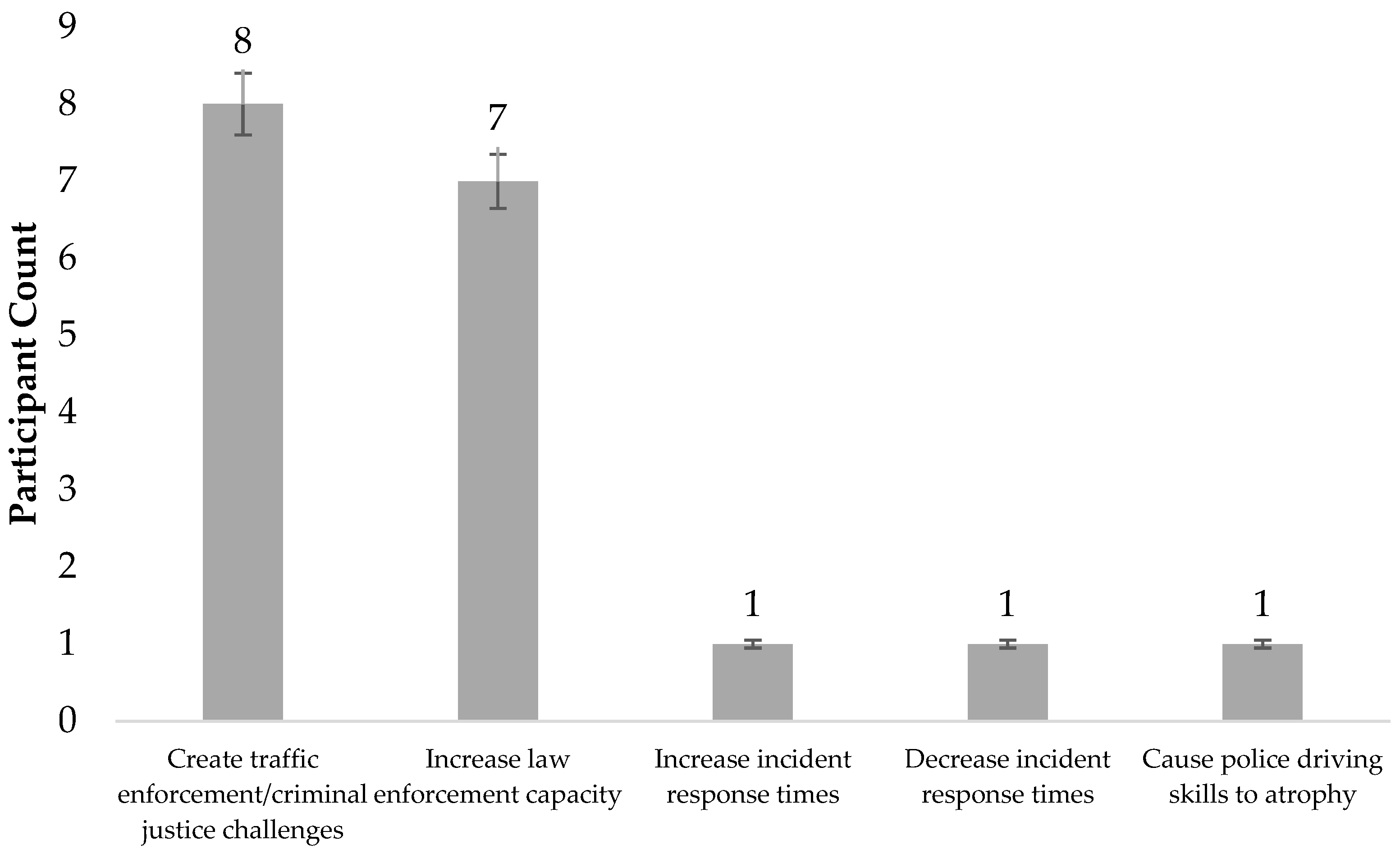

3.7. Societal Impacts of Self-Driving Technology

Public Safety (Reduce Driver Operator Error): “I am open to it because a very, very high percentage of accidents are based on driver error. So very little is vehicle problems, and very little is environmental problems. The rest of it is going to be on the driver. So, I would think that this will help […] I have been to a lot of accidents and the overwhelming majority of it is because of an error on the part of the driver. So, I am hopeful that this will actually help and be beneficial to safety.”(Police Officer 14)

Accountability Concerns: “I would say whoever is sitting technically in the driver’s seat because I would look at it as like a plane, if you are flying a plane that is on autopilot and the autopilot messes up, you as the pilot have to step in and take over the plane. So, if you are in a car that has self-driving technology and it starts messing up, you must step in and take over. So, you still must be paying attention, you cannot just hit auto drive and take a nap.”(Police Officer 18)

3.8. View of Self-Driving Technology

Increase Law Enforcement Capacity (Reallocate Human Resources): “Well, we investigate probably 125 motor vehicle collisions every single month. So mathematically, you are talking about, well over a thousand wrecks a year. So, if you could substantially reduce those crashes, that’s a lot of man hours that officers are not having to investigate those crashes […] Instead of them investigating crashes, they are doing something else.”(Police Officer 16)

Criminal Justice Challenges of AVs: “[…] I am still going to charge them. I mean, they are in the vehicle, they are supposed to be at least in some kind of control of the vehicle, whether they are touching the steering wheel or hitting the gas or not. Obviously, I do not know how that is going to work when it comes time to convict him. I mean, I am sure somebody will come up with some kind of defense where it is not the person’s fault, it is somebody else’s fault of course.”(Police Officer 3)

Atrophy of Police Driving Skills: “[…] where you run into issues is if someone does not drive because they are using that program, that car all the time, and then all of a sudden, they have to drive, I could see that could cause problems. Especially if you are doing some type of high-risk maneuver.”(Police Officer 12)

4. Discussion

4.1. The Intersection of Law Officer Qualities with Artificial Intelligence

4.2. Artificial Intelligence (AI) Technologies as Public Safety Goods

4.3. Self-Driving (Autonomous) Vehicles: Implications for Society and Policing

5. Policy Implications

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Demographic Characteristic (Interview Sample, N = 20) | Frequency | Percentage | Mean (SD) |
|---|---|---|---|
| Gender | | | |
| Male | 16 | 80% | |
| Female | 4 | 20% | |
| Tenure: Experience (years) | | | 18.8 (6.34) |
| 5 years or less | 1 | 5% | |
| 6 to 10 years | 2 | 10% | |
| 10 to 19 years | 9 | 45% | |
| 20 years or more | 8 | 40% | |
| Education Level 1 | | | |
| High School Diploma or (G.E.D.) | 12 | 60% | |
| Undergraduate Degree | 7 | 35% | |
| Postgraduate Degree | 1 | 5% | |
| Military Experience | | | |
| Yes | 6 | 30% | |
| No | 14 | 70% | |
| Law Enforcement Role | | | |
| Line/Patrol Officer | 5 | 25% | |
| Special Agent | 1 | 5% | |
| Supervisory Position | 10 | 50% | |
| Training | 3 | 15% | |
| Senior Leadership | 1 | 5% | |

| Code | Participant | Excerpt |
|---|---|---|
| Positive View of AI Technologies | PO3 * | “Yeah, I mean, I am all for technology. I mean, especially in law enforcement. I think, and of course I cannot speak for all law enforcement agencies, but I think most of the agencies are kind of behind on the times. And that all obviously has to do with money, being able to get grants, to get the technology. But anything that can help a law enforcement officer carry out his job, duty, or help the agency carry out their mission, I think is a good thing and I think it’s helpful.” |
| Negative View of AI Technologies | PO13 | “But, to me, there is no substitute for old school investigation of going and talking to somebody. I think you are going to make the problem even worse than what we have right now by doing that because I think everybody is looking at each other as an object, you are A, or you are B or you are C, you are not a human being. And I think AI would make that worse. We need to think of each other.” |
| Ambivalent View of AI Technologies | PO8 | “I think it needs to be pretty much studied more so that we can be certain of the efficacy. I am not really sold. I do think it would free up and allow manpower or increase officer ability to spend their time doing other things. But I am not too sure if I like that it could rid some jobs or just how effective it would be into providing correct information on things that humans can do.” |
| Positive View of Self-Driving Technology | PO16 | “I feel like in a perfect world, that is probably better than people, because with that automatic driving technology, everything does what it is supposed to do, whereas when humans drive, nobody does what they are supposed to do. People drive at different speeds, they have different following distances and that is what creates all the problems, but if everybody drives the same speed and has the same following distance, you probably will never have wrecks or have very few of them.” |
| Negative View of Self-Driving Technology | PO13 | “Oh, I absolutely hate it. Yeah, I am not a fan. I like driving for one and I do not trust computers that much. […] I do not believe in putting an Alexa in your house to hear everything that you say or do is recorded, because there is so many ways to hack in now. What if say, you are driving your Tesla down the road and somebody hacks in and next thing you know, you crash. I don’t like it. I like driving. I have always liked driving since I was young, and I would not trust it.” |
| Ambivalent View of Self-Driving Technology | PO1 | “I think the passive technologies that are here now with the light mitigation and stop mitigation, and anti-backing when it stops you from backing over somebody, I think that’s great. I don’t know, given here recently on TV, I saw a report of a Tesla. I think it was a Tesla that had wrecked, and it was in the self-drive mode. So, I think it’s still got a little bit farther to go before it matures enough to be widespread as they want it to be.” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dempsey, R.P.; Brunet, J.R.; Dubljević, V. Exploring and Understanding Law Enforcement’s Relationship with Technology: A Qualitative Interview Study of Police Officers in North Carolina. Appl. Sci. 2023, 13, 3887. https://doi.org/10.3390/app13063887